The Challenges of Data Management

John Macpherson on The Challenges of Data Management

I often get asked, what are the biggest trends impacting the Financial Services industry? Through my position as Chair of the Investment Association Engine, I have unprecedented access to the key decision-makers in the industry, as well as constant connectivity with the ever-expanding Fintech ecosystem, which has helped me stay at the cutting edge of the latest trends.

So, when I get asked, ‘what is the biggest trend that financial services will face?’, my answer for the past few years has remained the same: data.

During my time as CEO of BMLL, big data rose to prominence and developed into a multi-billion-dollar problem across financial services. I remember well an early morning interview I gave to CNBC around five years ago, where the facts were starkly presented. Back then, data was doubling every three years globally, and at an even faster pace in financial markets.

Firms are struggling under the weight of this data

The use of data is fundamental to a company's operations, but firms are finding it difficult to get a handle on this problem. The pace of this increase has left many smaller and mid-sized IM/AM firms in a quandary. Their ability to access, manage and use multiple data sources alongside their own data, market data, and any alternative data sources, is sub-optimal at best. Most core data systems are not architected to address the volume and pace of change required, with manual reviews and inputs creating unnecessary bottlenecks. These issues, among a host of others, mean risk management systems cannot cope. Modernised core data systems are imperative to recover the real-time insights currently lost to fragmented and slow-moving information.

Around half of all financial services data goes unmanaged and ungoverned. This “dark data” poses a security and regulatory risk, as well as a huge opportunity.

While data analytics, big data, AI, and data science have historically been the key sub-trends, these have been joined by data fabric (as an industry standard), analytical ops, data democratisation, and a shift from big data to smaller and wider data.

Operating models hold the key to data management

Governance is paramount to using this data in an effective, timely, accurate and meaningful way. Operating models are the true gauge of whether you are succeeding.

Much can be achieved with the relatively modest budget and resources firms have, provided they invest in the best operating models around their data.

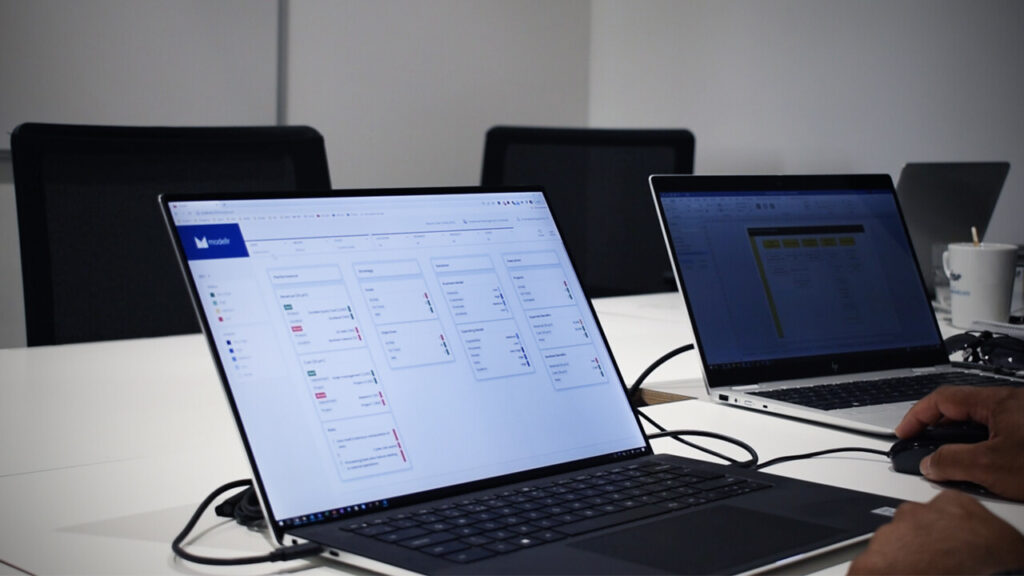

Leading Point is a firm I have been getting to know over several years now. Their data intelligence platform, modellr™, is the first truly digital operating model. modellr™ harvests a company’s existing data to create a living operating model, digitising the change process and enabling quicker, smarter decision-making. By digitising the process, they’re removing the historically slow and laborious consultative approach. Access to all the information in real time is proving transformative for smaller and medium-sized businesses.

True transparency around your data, understanding it and its consumption, and then enabling data products to support internal and external use cases, is very much available.

Different firms are at very different places on their maturity curve. Longer-term investment in data architecture, be it data fabric or data mesh, will provide the technical backbone to harness ML/AI and analytics.

Taking control of your data

Recently I was talking to a large investment bank for whom Leading Point had been brought in to help. The bank was looking to transform its client data management and associated regulatory processes, such as KYC and anti-financial crime.

They were investing heavily in sourcing, validating, normalising, remediating, and distributing over 2,000 data attributes. This was costing the bank a huge amount of time, money, and resources. But, despite the changes, their environment and change processes had become too complicated to have any chance of success. The process results were haphazard, with poor controls and no clear understanding of what was missing.

Leading Point was brought in to help and decided on a data minimisation approach. They profiled and analysed the data, despite having to work across regions and divisions. Quickly, 2,000 data attributes were narrowed to fewer than 200 critical ones for the consuming functions. This allowed the financial institution's regulatory and reporting processes to come to life, with clear data quality measurement and ownership processes. It also allowed the institution to significantly reduce the complexity of its data and improve its usability, meaning that multiple business owners were able to produce rapid and tangible results.
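To make the idea of data minimisation a little more concrete, here is a minimal sketch of how a profiled attribute inventory might be narrowed to the critical few. It is purely illustrative and not a description of how Leading Point or modellr™ actually works: the `AttributeProfile` class, the `select_critical` function, the thresholds, and the sample attribute names are all hypothetical assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class AttributeProfile:
    """Hypothetical profiling result for one data attribute."""
    name: str
    fill_rate: float  # share of records where the attribute is populated (0.0 to 1.0)
    consumers: set[str] = field(default_factory=set)  # functions that actually use it


def select_critical(profiles, min_consumers=1, min_fill_rate=0.5):
    """Keep attributes that at least one consuming function uses
    and that are populated often enough to be worth governing.
    Both thresholds are illustrative assumptions, not industry rules."""
    return [
        p for p in profiles
        if len(p.consumers) >= min_consumers and p.fill_rate >= min_fill_rate
    ]


# Made-up sample inventory; a real one would hold thousands of attributes.
profiles = [
    AttributeProfile("legal_entity_name", 0.99, {"KYC", "regulatory_reporting"}),
    AttributeProfile("country_of_incorporation", 0.95, {"KYC"}),
    AttributeProfile("legacy_branch_code", 0.40, {"KYC"}),  # used, but sparsely populated
    AttributeProfile("fax_number", 0.80, set()),            # populated, but nobody consumes it
]

critical = select_critical(profiles)
critical_names = [p.name for p in critical]
print(critical_names)
```

In this sketch, an attribute only survives if someone downstream genuinely needs it and the data is there to support them. In practice the criteria would be agreed with the consuming functions rather than hard-coded.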

I was speaking to Rajen Madan, the CEO of Leading Point, and we agreed that in a world of ever-growing data, data minimisation is often key to maximising success with data!

Elsewhere, Leading Point has seen benefits unlocked from unifying data models, and working on ontologies, standards, and taxonomies. Their platform, modellr™, is enabling many firms to link their data, define common aggregations, and support knowledge graph initiatives, allowing firms to deliver more timely, accurate and complete reporting, as well as insights on their business processes.

The need for agile, scalable, secure, and resilient tech infrastructure is more pressing than ever. Firms’ own legacy ways of handling this data are the single biggest barrier to their growth and technological innovation.

If you see a digital operating model as anything other than a must-have, then you are missing out. It’s time for a serious re-think.

Words by John Macpherson — Board advisor at Leading Point, Chair of the Investment Association Engine

John was recently interviewed about his role at Leading Point, and the key trends he sees affecting the financial services industry. Watch his interview here