AI Under Scrutiny

Why AI risk & governance should be a focus area for financial services firms

Introduction

As financial services firms increasingly integrate artificial intelligence (AI) into their operations, the imperative to focus on AI risk & governance becomes paramount. AI offers transformative potential, driving innovation, enhancing customer experiences, and streamlining operations. However, with this potential comes significant risks that can undermine the stability, integrity, and reputation of financial institutions. This article delves into the critical importance of AI risk & governance for financial services firms, providing a detailed exploration of the associated risks, regulatory landscape, and practical steps for effective implementation. Our goal is to persuade financial services firms to prioritise AI governance to safeguard their operations and ensure regulatory compliance.

The Growing Role of AI in Financial Services

AI adoption in the financial services industry is accelerating, driven by its ability to analyse vast amounts of data, automate complex processes, and provide actionable insights. Financial institutions leverage AI for various applications, including fraud detection, credit scoring, risk management, customer service, and algorithmic trading. According to a report by McKinsey & Company, AI could potentially generate up to $1 trillion of additional value annually for the global banking sector.

Applications of AI in Financial Services

1 Fraud Detection and Prevention: AI algorithms analyse transaction patterns to identify and prevent fraudulent activities, reducing losses and enhancing security.

2 Credit Scoring and Risk Assessment: AI models evaluate creditworthiness by analysing non-traditional data sources, improving accuracy and inclusivity in lending decisions.

3 Customer Service and Chatbots: AI-powered chatbots and virtual assistants provide 24/7 customer support, while machine learning algorithms offer personalised product recommendations.

4 Personalised Financial Planning: AI-driven platforms offer tailored financial advice and investment strategies based on individual customer profiles, goals, and preferences, enhancing client engagement and satisfaction.

Potential Benefits of AI

The benefits of AI in financial services are manifold, including increased efficiency, cost savings, enhanced decision-making, and improved customer satisfaction. AI-driven automation reduces manual workloads, enabling employees to focus on higher-value tasks. Additionally, AI's ability to uncover hidden patterns in data leads to more informed and timely decisions, driving competitive advantage.

The Importance of AI Governance

AI governance encompasses the frameworks, policies, and practices that ensure the ethical, transparent, and accountable use of AI technologies. It is crucial for managing AI risks and maintaining stakeholder trust. Without robust governance, financial services firms risk facing adverse outcomes such as biased decision-making, regulatory penalties, reputational damage, and operational disruptions.

Key Components of AI Governance

1 Ethical Guidelines: Establishing ethical principles to guide AI development and deployment, ensuring fairness, accountability, and transparency.

2 Risk Management: Implementing processes to identify, assess, and mitigate AI-related risks, including bias, security vulnerabilities, and operational failures.

3 Regulatory Compliance: Ensuring adherence to relevant laws and regulations governing AI usage, such as data protection and automated decision-making.

4 Transparency and Accountability: Promoting transparency in AI decision-making processes and holding individuals and teams accountable for AI outcomes.

Risks of Neglecting AI Governance

Neglecting AI governance can lead to several significant risks:

1 Embedded bias: AI algorithms can unintentionally perpetuate biases if trained on biased data or if developers inadvertently incorporate them. This can lead to unfair treatment of certain groups and potential violations of fair lending laws.

2 Explainability and complexity: AI models can be highly complex, making it challenging to understand how they arrive at decisions. This lack of explainability raises concerns about transparency, accountability, and regulatory compliance.

3 Cybersecurity: Increased reliance on AI systems raises cybersecurity concerns, as hackers may exploit vulnerabilities in AI algorithms or systems to gain unauthorised access to sensitive financial data.

4 Data privacy: AI systems rely on vast amounts of data, raising privacy concerns related to the collection, storage, and use of personal information.

5 Robustness: AI systems may not perform optimally in certain situations and are susceptible to errors. Adversarial attacks can compromise their reliability and trustworthiness.

6 Impact on financial stability: Widespread adoption of AI in the financial sector can have implications for financial stability, potentially amplifying market dynamics and leading to increased volatility or systemic risks.

7 Underlying data risks: AI models are only as good as the data that supports them. Incorrect or biased data can lead to inaccurate outputs and decisions.

8 Ethical considerations: The potential displacement of certain roles due to AI automation raises ethical concerns about societal implications and firms' responsibilities to their employees.

9 Regulatory compliance: As AI becomes more integral to financial services, there is an increasing need for transparency and regulatory explainability in AI decisions to maintain compliance with evolving standards.

10 Model risk: The complexity and evolving nature of AI technologies mean that their strengths and weaknesses are not yet fully understood, potentially leading to unforeseen pitfalls in the future.

To address these risks, financial institutions need to implement robust risk management frameworks, enhance data governance, develop AI-ready infrastructure, increase transparency, and stay updated on evolving regulations specific to AI in financial services.

The consequences of inadequate AI governance can be severe. Financial institutions that fail to implement proper risk management and governance frameworks may face significant financial penalties, reputational damage, and regulatory scrutiny. The EU AI Act, for instance, provides for fines of up to €35 million or 7% of global annual turnover for the most serious infringements (the original proposal set the ceiling at €30 million or 6%). Beyond regulatory consequences, poor AI governance can lead to biased decision-making, privacy breaches, and erosion of customer trust, all of which can have long-lasting impacts on a firm's operations and market position.

Regulatory Requirements

The regulatory landscape for AI in financial services is evolving rapidly, with regulators worldwide introducing guidelines and standards to ensure the responsible use of AI. Compliance with these regulations is not only a legal obligation but also a critical component of building a sustainable and trustworthy AI strategy.

Key Regulatory Frameworks

1 General Data Protection Regulation (GDPR): The European Union's GDPR imposes strict requirements on data processing and the use of automated decision-making systems, ensuring transparency and accountability.

2 Financial Conduct Authority (FCA): The FCA in the UK has issued guidance on AI and machine learning, emphasising the need for transparency, accountability, and risk management in AI applications.

3 Federal Reserve: The Federal Reserve in the US has provided supervisory guidance on model risk management, highlighting the importance of robust governance and oversight for AI models.

4 Monetary Authority of Singapore (MAS): MAS has introduced guidelines for the ethical use of AI and data analytics in financial services, promoting fairness, ethics, accountability, and transparency (FEAT).

5 EU AI Act: This new act aims to protect fundamental rights, democracy, the rule of law and environmental sustainability from high-risk AI, while boosting innovation and establishing Europe as a leader in the field. The regulation establishes obligations for AI based on its potential risks and level of impact.

Importance of Compliance

Compliance with regulatory requirements is essential for several reasons:

1 Legal Obligation: Financial services firms must adhere to laws and regulations governing AI usage to avoid legal penalties and fines.

2 Reputational Risk: Non-compliance can damage a firm's reputation, eroding trust with customers, investors, and regulators.

3 Operational Efficiency: Regulatory compliance ensures that AI systems are designed and operated according to best practices, enhancing efficiency and effectiveness.

4 Stakeholder Trust: Adhering to regulatory standards builds trust with stakeholders, demonstrating a commitment to responsible and ethical AI use.

Identifying AI Risks

AI technologies pose several specific risks to financial services firms that must be identified and mitigated through effective governance frameworks.

Bias and Discrimination

AI systems can reflect and reinforce biases present in training data, leading to discriminatory outcomes. For instance, biased credit scoring models may disadvantage certain demographic groups, resulting in unequal access to financial services. Addressing bias requires rigorous data governance practices, including diverse and representative training data, regular bias audits, and transparent decision-making processes.
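One way to make a "regular bias audit" concrete is to compare approval rates across groups. The sketch below is illustrative only: the function names, the sample decisions, and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb used in US employment-selection guidance) are assumptions, not something this article prescribes. A real audit would use the fairness metrics and legal tests appropriate to the firm's jurisdiction and product.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, is_approved in decisions:
        totals[group] += 1
        if is_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Ratio of the lowest group approval rate to the highest."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favoured
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, loan approved?)
SAMPLE_DECISIONS = (
    [("group_a", True)] * 80 + [("group_a", False)] * 20
    + [("group_b", True)] * 50 + [("group_b", False)] * 50
)

ratio = disparate_impact_ratio(SAMPLE_DECISIONS)
needs_review = ratio < 0.8  # assumed threshold, not a legal test
print(f"Disparate impact ratio: {ratio:.3f}, needs review: {needs_review}")
```

In this made-up sample, group_a is approved 80% of the time and group_b 50% of the time, so the ratio falls below the assumed threshold and the model would be flagged for closer review.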

Security Risks

AI systems are vulnerable to various security threats, including cyberattacks, data breaches, and adversarial manipulations. Cybercriminals can exploit vulnerabilities in AI models to manipulate outcomes or gain unauthorised access to sensitive financial data. Ensuring the security and integrity of AI systems involves implementing robust cybersecurity measures, regular security assessments, and incident response plans.

Operational Risks

AI-driven processes can fail or behave unpredictably under certain conditions, potentially disrupting critical financial services. For example, algorithmic trading systems can trigger market instability if not responsibly managed. Effective governance frameworks include comprehensive testing, continuous monitoring, and contingency planning to mitigate operational risks and ensure reliable AI performance.

Compliance Risks

Failure to adhere to regulatory requirements can result in significant fines, legal consequences, and reputational damage. AI systems must be designed and operated in compliance with relevant laws and regulations, such as data protection and automated decision-making guidelines. Regular compliance audits and updates to governance frameworks are essential to ensure ongoing regulatory adherence.

Benefits of Effective AI Governance

Implementing robust AI governance frameworks offers numerous benefits for financial services firms, enhancing risk management, trust, and operational efficiency.

Risk Mitigation

Effective AI governance helps identify, assess, and mitigate AI-related risks, reducing the likelihood of adverse outcomes. By implementing comprehensive risk management processes, firms can proactively address potential issues and ensure the safe and responsible use of AI technologies.

Enhanced Trust and Transparency

Transparent and accountable AI practices build trust with customers, regulators, and other stakeholders. Clear communication about AI decision-making processes, ethical guidelines, and risk management practices demonstrates a commitment to responsible AI use, fostering confidence and credibility.

Regulatory Compliance

Adhering to governance frameworks ensures compliance with current and future regulatory requirements, minimising legal and financial repercussions. Robust governance practices align AI development and deployment with regulatory standards, reducing the risk of non-compliance and associated penalties.

Operational Efficiency

Governance frameworks streamline the development and deployment of AI systems, promoting efficiency and consistency in AI-driven operations. Standardised processes, clear roles and responsibilities, and ongoing monitoring enhance the effectiveness and reliability of AI applications, driving operational excellence.

Case Studies

Several financial services firms have successfully implemented AI governance frameworks, demonstrating the tangible benefits of proactive risk management and responsible AI use.

JP Morgan Chase

JP Morgan Chase has established a comprehensive AI governance structure that includes an AI Ethics Board, regular audits, and robust risk assessment processes. The AI Ethics Board oversees the ethical implications of AI applications, ensuring alignment with the bank's values and regulatory requirements. Regular audits and risk assessments help identify and mitigate AI-related risks, enhancing the reliability and transparency of AI systems.

ING Group

ING Group has developed an AI governance framework that emphasises transparency, accountability, and ethical considerations. The framework includes guidelines for data usage, model validation, and ongoing monitoring, ensuring that AI applications align with the bank's values and regulatory requirements. By prioritising responsible AI use, ING has built trust with stakeholders and demonstrated a commitment to ethical and transparent AI practices.

HSBC

HSBC has implemented a robust AI governance framework that focuses on ethical AI development, risk management, and regulatory compliance. The bank's AI governance framework includes a dedicated AI Ethics Committee, comprehensive risk management processes, and regular compliance audits. These measures ensure that AI applications are developed and deployed responsibly, aligning with regulatory standards and ethical guidelines.

Practical Steps for Implementation

To develop and implement effective AI governance frameworks, financial services firms should consider the following actionable steps:

Establish a Governance Framework

Develop a comprehensive AI governance framework that includes policies, procedures, and roles and responsibilities for AI oversight. The framework should outline ethical guidelines, risk management processes, and compliance requirements, providing a clear roadmap for responsible AI use.

Create an AI Ethics Board

Form an AI Ethics Board or committee to oversee the ethical implications of AI applications and ensure alignment with organisational values and regulatory requirements. The board should include representatives from diverse departments, including legal, compliance, risk management, and technology.

Implement Specific AI Risk Management Processes

Conduct regular risk assessments to identify and mitigate AI-related risks. Implement robust monitoring and auditing processes to ensure ongoing compliance and performance. Risk management processes should include bias audits, security assessments, and contingency planning to address potential operational failures.

Ensure Data Quality and Integrity

Establish data governance practices to ensure the quality, accuracy, and integrity of data used in AI systems. Address potential biases in data collection and processing, and implement measures to maintain data security and privacy. Regular data audits and validation processes are essential to ensure reliable and unbiased AI outcomes.

Invest in Training and Awareness

Provide training and resources for employees to understand AI technologies, governance practices, and their roles in ensuring ethical and responsible AI use. Ongoing education and awareness programmes help build a culture of responsible AI use, promoting adherence to governance frameworks and ethical guidelines.

Engage with Regulators and Industry Bodies

Stay informed about regulatory developments and industry best practices. Engage with regulators and industry bodies to contribute to the development of AI governance standards and ensure alignment with evolving regulatory requirements. Active participation in industry forums and collaborations helps stay ahead of regulatory changes and promotes responsible AI use.

Conclusion

As financial services firms continue to embrace AI, the importance of robust AI risk & governance frameworks cannot be overstated. By proactively addressing the risks associated with AI and implementing effective governance practices, firms can unlock the full potential of AI technologies while safeguarding their operations, maintaining regulatory compliance, and building trust with stakeholders. Prioritising AI risk & governance is not just a regulatory requirement but a strategic imperative for the sustainable and ethical use of AI in financial services.

References and Further Reading

- McKinsey & Company. (2020). The AI Bank of the Future: Can Banks Meet the AI Challenge?

- European Union. (2018). General Data Protection Regulation (GDPR).

- Financial Conduct Authority (FCA). (2019). Guidance on the Use of AI and Machine Learning in Financial Services.

- Federal Reserve. (2011). Supervisory Guidance on Model Risk Management (SR 11-7).

- JP Morgan Chase. (2021). AI Ethics and Governance Framework.

- ING Group. (2021). Responsible AI: Our Approach to AI Governance.

- Monetary Authority of Singapore (MAS). (2019). FEAT Principles for the Use of AI and Data Analytics in Financial Services.

For further reading on AI governance and risk management in financial services, consider the following resources:

- "Artificial Intelligence: A Guide for Financial Services Firms" by Deloitte

- "Managing AI Risk in Financial Services" by PwC

- "AI Ethics and Governance: A Global Perspective" by the World Economic Forum

Strengthening Information Security

The Combined Power of Identity & Access Management and Data Access Controls

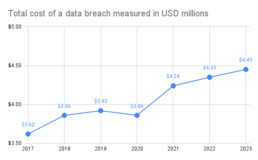

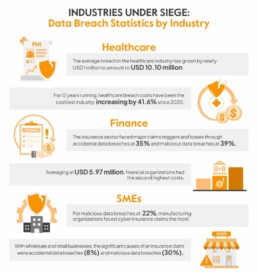

The digital age presents a double-edged sword for businesses. While technology advancements offer exciting capabilities in cloud, data analytics, and customer experience, they also introduce new security challenges. Data breaches are a constant threat, costing businesses an average of $4.45 million per incident according to a 2023 IBM report (https://www.ibm.com/reports/data-breach) and eroding consumer trust. Traditional security measures often fall short, leaving vulnerabilities for attackers to exploit. These attackers, targeting poorly managed identities and weak data protection, aim to disrupt operations, steal sensitive information, or even hold companies hostage. The impact extends beyond the business itself, damaging customers, stakeholders, and the broader financial market.

In response to these evolving threats, the European Union (EU) has adopted the Digital Operational Resilience Act (DORA) (Regulation (EU) 2022/2554). This regulation focuses on strengthening information and communications technology (ICT) resilience standards in the financial services sector. While designed for the EU, DORA’s requirements offer valuable insights for businesses globally, especially those with operations in the EU or the UK. DORA mandates that financial institutions define, approve, oversee, and be accountable for implementing a robust risk-management framework. This is where identity & access management (IAM) and data access controls (DAC) come in.

The Threat Landscape and Importance of Data Security

Data breaches are just one piece of the security puzzle. Malicious entities also employ malware, phishing attacks, and even exploit human error to gain unauthorised access to sensitive data. Regulatory compliance further emphasises the importance of data security. Frameworks like GDPR and HIPAA mandate robust data protection measures. Failure to comply can result in hefty fines and reputational damage.

Organisations operating in a rapidly evolving hybrid working environment urgently need to implement or review their information security strategy. This includes solutions that not only reduce the attack surface but also improve control over who accesses what data within the organisation. IAM and DAC, along with fine-grained access provisioning for various data formats, are critical components of a strong cybersecurity strategy.

Keep reading to learn the key differences between IAM and DAC, and how they work in tandem to create a strong security posture.

Identity & Access Management (IAM)

Think of IAM as the gatekeeper to your digital environment. It ensures only authorised users can access specific systems and resources. Here is a breakdown of its core components:

- Identity Management (authentication): This involves creating, managing, and authenticating user identities. IAM systems manage user provisioning (granting access), authentication (verifying user identity through methods like passwords or multi-factor authentication [MFA]), and authorisation (determining user permissions). Common identity management practices include:

- Single Sign-On (SSO): Users can access multiple applications with a single login, improving convenience and security.

- Multi-Factor Authentication (MFA): An extra layer of security requiring an additional verification factor beyond a password (e.g., fingerprint, security code).

- Passwordless: A recent usability improvement removes the use of passwords and replaces them with authentication apps and biometrics.

- Adaptive or Risk-based Authentication: Uses AI and machine learning to analyse user behaviour and adjust authentication requirements in real-time based on risk level.

- Access Management (authorisation): Once a user's identity has been authenticated, access management determines which resources that user may access. IAM systems apply tailored access policies based on user identities and other attributes, and then control access to applications, data, and other resources accordingly.

Advanced IAM concepts like Privileged Access Management (PAM) focus on securing access for privileged users with high-level permissions, while Identity Governance ensures user access is reviewed and updated regularly.

Data Access Control (DAC)

While IAM focuses on user identities and overall system access, DAC takes a more granular approach, regulating access to specific data stored within those systems. Here are some common DAC models:

- Discretionary Access Control (also DAC): Allows data owners to manage access permissions for other users. While offering flexibility, it can lead to inconsistencies and security risks if not managed properly. One example of this is UNIX files, where an owner of a file can grant or deny other users access.

- Mandatory Access Control (MAC): Here, the system enforces access based on pre-defined security labels assigned to data and users. This offers stricter control but requires careful configuration.

- Role-Based Access Control (RBAC): This approach complements IAM RBAC by defining access permissions for specific data sets based on user roles.

- Attribute-Based Access Control (ABAC): Permissions are granted based on a combination of user attributes, data attributes, and environmental attributes, offering a more dynamic and contextual approach.

- Encryption: Data is rendered unreadable without the appropriate decryption key, adding another layer of protection.
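To illustrate how the attribute-based model in the list above differs from simple role checks, here is a minimal sketch. All class, field, and function names are hypothetical, and the single "on corporate network" flag is an assumed stand-in for environmental attributes. A production policy engine would be considerably richer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    name: str
    countries: frozenset  # countries the user is cleared to support
    products: frozenset   # products the user is cleared to support


@dataclass(frozen=True)
class CustomerRecord:
    customer_id: int
    country: str
    product: str


def can_read(user, record, on_corporate_network):
    """ABAC check: user, data, and environmental attributes must all match."""
    return (
        record.country in user.countries   # user attribute vs data attribute
        and record.product in user.products
        and on_corporate_network           # environmental attribute
    )


analyst = User("analyst", frozenset({"UK"}), frozenset({"mortgages"}))
uk_mortgage = CustomerRecord(1, "UK", "mortgages")
fr_mortgage = CustomerRecord(2, "FR", "mortgages")

print(can_read(analyst, uk_mortgage, on_corporate_network=True))
print(can_read(analyst, fr_mortgage, on_corporate_network=True))
print(can_read(analyst, uk_mortgage, on_corporate_network=False))
```

The decision is computed from attributes at request time, so granting a user a new country means changing one attribute rather than creating or reassigning groups.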

IAM vs. DAC: Key Differences and Working Together

While IAM and DAC serve distinct purposes, they work in harmony to create a comprehensive security posture. Here is a table summarising the key differences:

| Feature | IAM | DAC |
| --- | --- | --- |
| Description | Controls access to applications | Controls access to data within applications |
| Granularity | Broader – manages access to entire systems | More fine-grained – controls access to specific data; checks user attributes |
| Enforcement | User-based (IAM) or system-based (MAC) | System-based enforcement (MAC) or user-based (DAC) |

Imagine an employee accessing customer data in a CRM system. IAM verifies their identity and grants access to the CRM application. However, DAC determines what specific customer data they can view or modify based on their role (e.g., a sales representative might have access to contact information but not financial details).
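The CRM scenario can be sketched in a few lines. This assumes IAM has already authenticated the user and supplied their role. The role names, field names, and sample record are all hypothetical.

```python
# Hypothetical mapping from role to the customer fields that role may view.
VISIBLE_FIELDS = {
    "sales_representative": {"name", "email", "phone"},
    "finance_officer": {"name", "email", "phone", "account_balance"},
}


def view_customer(record, role):
    """Return only the fields of `record` that `role` is allowed to see."""
    allowed = VISIBLE_FIELDS.get(role, set())  # unknown roles see nothing
    return {field: value for field, value in record.items() if field in allowed}


customer = {
    "name": "A. Customer",
    "email": "a.customer@example.com",
    "phone": "+44 20 7946 0000",
    "account_balance": 12500.00,
}

print(view_customer(customer, "sales_representative"))
print(view_customer(customer, "finance_officer"))
```

Both users pass the same IAM check to reach the CRM, but the data access layer returns a different view of the same record to each of them.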

Dispelling Common Myths

Several misconceptions surround IAM and DAC. Here is why they are not entirely accurate:

- Myth 1: IAM is all I need. The most common mistake that organisations make is to conflate IAM and DAC, or worse, assume that if they have IAM, that includes DAC. Here is a hint. It does not.

- Myth 2: IAM is only needed by large enterprises. Businesses of all sizes must use IAM to secure access to their applications and ensure compliance. Scalable IAM solutions are readily available.

- Myth 3: More IAM tools equal better security. A layered approach is crucial. Implementing too many overlapping IAM tools can create complexity and management overhead. Focus on choosing the right tools that complement each other and address specific security needs.

- Myth 4: Data access control is enough for complete security. While DAC plays a vital role, it is only one piece of the puzzle. Strong IAM practices ensure authorised users are accessing systems, while DAC manages their access to specific data within those systems. A comprehensive security strategy requires both.

Tools for Effective IAM and DAC

There are various IAM and DAC solutions available, and the best choice depends on your specific needs. While Active Directory remains a popular IAM solution for Windows-based environments, it may not be ideal for complex IT infrastructures or organisations managing vast numbers of users and data access needs.

Imagine a scenario where your application has 1,000 users and holds sensitive & personal customer information for 1,000,000 customers split across ten countries and five products. Not every user should see every customer record. It might be limited to the country the user works in and the specific product they support. This is the “Principle of Least Privilege.” Applying this principle is critical to demonstrating you have appropriate data access controls.

To control access to this data, you would need to create tens of thousands of AD groups for every combination of country or countries and product or products. This is unsustainable and makes choosing AD groups to manage data access control an extremely poor choice.
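The "tens of thousands" figure can be checked with a short calculation. The sketch assumes one group is needed for every non-empty set of countries paired with every non-empty set of products, which is one reading of "every combination" above. The function name is hypothetical.

```python
def group_count(num_countries, num_products):
    """Groups needed if every non-empty set of countries is paired with
    every non-empty set of products."""
    country_sets = 2 ** num_countries - 1  # exclude the empty set
    product_sets = 2 ** num_products - 1
    return country_sets * product_sets


groups = group_count(num_countries=10, num_products=5)
print(groups)  # 1023 * 31 = 31713
```

Under that assumption, ten countries and five products already imply 31,713 possible groups, and adding one more country roughly doubles the total.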

The complexity of managing nested AD groups and potential integration challenges with non-Windows systems highlight the importance of carefully evaluating your specific needs when choosing IAM tools. Consider exploring cloud-based IAM platforms or Identity Governance and Administration (IGA) solutions for centralised management and streamlined access control.

Building a Strong Security Strategy

The EU’s Digital Operational Resilience Act (DORA) emphasises strong IAM practices for financial institutions and applies from 17 January 2025. DORA requires financial organisations to define, approve, oversee, and be accountable for implementing robust IAM and data access controls as part of their risk management framework.

Here are some key areas where IAM and DAC can help organisations comply with DORA and protect themselves:

| DORA Pillar | How IAM helps | How DAC helps |
| --- | --- | --- |
| ICT risk management | Identifies risks associated with unauthorised access/misuse; detects users with excessive permissions or dormant accounts | Minimises damage from breaches by restricting access to specific data |
| ICT-related incident reporting | Provides audit logs for investigating breaches (user activity, login attempts, accessed resources); helps identify source of attack and compromised accounts | Helps determine scope of breach and potentially affected information |
| ICT third-party risk management | Manages access for third-party vendors/partners; grants temporary access with limited permissions, reducing attack surface | Restricts access for third-party vendors by limiting ability to view/modify sensitive data |
| Information sharing | Permits designated, authorised users to share sensitive information | Controls access to shared information via roles and rules |
| Digital operational resilience testing | Enables testing of IAM controls to identify vulnerabilities; penetration testing simulates attacks to assess effectiveness of IAM controls | Ensures data access restrictions are properly enforced and minimises breach impact |

Understanding IAM and DAC empowers you to build a robust data security strategy

Use these strategies to leverage the benefits of IAM and DAC combined:

- Recognise the difference between IAM and DAC, and how they are implemented in your organisation

- Conduct regular IAM and DAC audits to identify and address vulnerabilities

- Implement best practices like the Principle of Least Privilege (granting users only the minimum access required for their job function)

- Regularly review and update user access permissions

- Educate employees on security best practices (e.g., password hygiene, phishing awareness)

Explore different IAM and DAC solutions based on your specific organisational needs and security posture. Remember, a layered approach that combines IAM, DAC, and other security measures like encryption creates the most effective defence against data breaches and unauthorised access.

Conclusion

By leveraging the combined power of IAM and DAC, you can ensure only the right people have access to the right data at the right time. This fosters trust with stakeholders, protects your reputation, and safeguards your valuable information assets.

Top 5 Trends for MLROs in 2024

Our Financial Crime Practice Lead, Kavita Harwani, recently attended the FRC Leadership Convention at the Celtic Manor, Newport, Wales. This gave us the opportunity to engage with senior leaders in the financial risk and compliance space on the latest best practices, upcoming technology advances, and practical insights.

Criminals are becoming increasingly sophisticated, driving MLROs to innovate their financial crime controls. There is never a quiet time for FRC professionals, but 2024 is proving to be exceptionally busy.

Our view on the top five trends that MLROs need to focus on is presented here.

Top 5 Trends

- Minimising costs by using technology to scan the regulatory horizon and identify impacts on your business

- Accelerating transaction monitoring & decisioning by applying AI & data analytics

- Optimising due diligence with a 360 view of the customers

- Improving operational efficiency by using machine learning to automate alert handling

- Reducing financial crime risk through training and communications programmes

1. Regulatory Compliance and Adaptation

MLROs need to stay abreast of evolving regulatory frameworks and compliance requirements. With regulatory changes occurring frequently, MLROs must ensure their organisations are compliant with the latest anti-money laundering (AML) and counter-terrorist financing (CTF) regulations.

This involves scanning the regulatory horizon, updating policies, procedures, and systems to reflect regulatory updates and adapting swiftly to new compliance challenges.

2. Technology & Data Analytics

MLROs will increasingly leverage advanced technology and data analytics tools to enhance their AML capabilities.

Machine learning algorithms and predictive analytics can help identify suspicious activities more effectively, allowing MLROs to detect and prevent money laundering and financial crime quicker, at lower cost, and with higher accuracy rates.

MLROs must focus on implementing robust AML technologies and optimising data analytics strategies to improve risk detection and decision-making processes.

3. Customer Due Diligence (CDD) and Enhanced Due Diligence (EDD)

MLROs should prioritise strengthening CDD processes to better understand their customers’ risk of committing financial crimes.

Enhanced due diligence is critical for high-risk customers, such as politically exposed persons (PEPs) and high net worth individuals (HNWIs).

MLROs should focus on enhancing risk-based approaches to CDD and EDD, leveraging technology and data analytics to streamline customer onboarding processes while maintaining compliance with regulatory requirements.

4. Transaction Monitoring and Suspicious Activity Reporting

MLROs will continue to refine transaction monitoring systems to effectively identify suspicious activities and generate accurate alerts for investigation.

MLROs should focus on optimising transaction monitoring rules and scenarios to reduce false positives and prioritise high-risk transactions for further review.
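As an illustration of rule tuning and prioritisation, the following minimal Python sketch scores transactions against a few simple rules, suppresses low scores to limit false positives, and ranks the remaining alerts for review. The `Transaction` fields, the weights, and both thresholds are hypothetical assumptions chosen for the example; they are not drawn from any regulation or vendor system.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Transaction:
    tx_id: str
    amount: float
    customer_risk: str        # hypothetical rating: "low", "medium" or "high"
    high_risk_country: bool   # hypothetical flag set by an upstream screening step


# Illustrative weights only; a real rule set would be calibrated and back-tested.
RISK_WEIGHT = {"low": 0, "medium": 20, "high": 40}


def score(tx: Transaction, amount_threshold: float = 10_000.0) -> int:
    """Return a simple additive risk score for one transaction."""
    total = RISK_WEIGHT[tx.customer_risk]
    if tx.amount >= amount_threshold:
        total += 40
    if tx.high_risk_country:
        total += 20
    return total


def prioritise(txs: List[Transaction], alert_threshold: int = 60) -> List[Tuple[int, Transaction]]:
    """Keep transactions at or above the alert threshold, highest score first."""
    scored = [(score(tx), tx) for tx in txs]
    alerts = [pair for pair in scored if pair[0] >= alert_threshold]
    return sorted(alerts, key=lambda pair: pair[0], reverse=True)
```

Raising `alert_threshold` reduces the number of alerts (and so false positives) at the cost of potentially missing lower-scoring activity, which is the trade-off that rule optimisation has to balance.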

Enhanced collaboration with law enforcement agencies and financial intelligence units will be crucial for timely and accurate suspicious activity reporting. Cross-industry collaboration is an expanding route to quicker insights on bad actors and behaviours.

5. Training and Awareness Programmes

MLROs must invest in comprehensive training and awareness programmes to educate employees on AML risks, obligations, and best practices.

Building a strong culture of compliance within the organisation is essential for effective AML risk management.

Additionally, MLROs must promote a proactive approach to AML compliance, encouraging employees to raise concerns and seek guidance when faced with potential AML risks.

Conclusion

The expanded use of technology and data is becoming more evident from our discussions. The latest, ever-accelerating improvements in automation and AI have brought a new set of opportunities to transform legacy manual, people-heavy processes into streamlined, efficient, and effective anti-financial crime departments.

Leading Point has a specialist financial crime team and can help strengthen your operations and meet these challenges in 2024. Reach out to our practice lead Kavita Harwani on kavita@leadingpoint.io to discuss your needs further.

Helping a leading investment bank improve its client on-boarding processes into a single unified operating model

Our client, like many banks, was facing multiple challenges in its onboarding and account opening processes. Scalability and efficiency were two important metrics we were asked to improve. Our senior experts interviewed the onboarding teams to document the current process and recommended a new unified process covering front, middle and back office teams.

We identified and removed key-person dependencies and documented the new process into a key operating manual for global use.

Helping a global investment bank design & execute a client data governance target operating model

Our client had a challenge to evidence control of their 2000+ client data elements. We were asked to implement a new target operating model for client data governance in six months. Our approach was to identify the core, essential data elements used by the most critical business processes and start governance for these, including data ownership and data quality.

We delivered business capability models, data governance processes, data quality rules & reporting, global support coverage for 100+ critical data elements supporting regulatory reporting and risk.

Helping a global investment bank reduce its residual risk with a target operating model

Our client asked us to provide operating model design & governance expertise for its anti-financial crime (AFC) controls. We reviewed and approved the bank’s AFC target operating model using our structured approach, ensuring designs were compliant with regulations, aligned to strategy, and delivered measurable outcomes.

We delivered clear designs with capability impact maps, process models, and system & data architecture diagrams, enabling change teams to execute the AFC strategy.

Helping a Japanese investment bank to develop & execute their trading front-to-back operating model

Our client wanted to increase their trading efficiency by improving their data sourcing processes and resource efficiency in a multi-year programme. We analysed over 3,500 data feeds from 50 front office systems and over 100 reconciliations to determine how best to optimise their data.

Streamlining their data usage and operational processes is estimated to save them 20-30% in costs over the next five years.

Improving a DLT FinTech's operations enabling rapid scaling in target markets

"Leading Point brings a top-flight management team, a reputation for quality and professionalism, and will heighten the value of [our] applications through its extensive knowledge of operations in the financial services sector."

Chief Risk Officer at DLT FinTech

Increasing data product offerings by profiling 80k terms at a global data provider

“Through domain & technical expertise Leading Point have been instrumental in the success of this project to analyse and remediate 80k industry terms. LP have developed a sustainable process, backed up by technical tools, allowing the client to continue making progress well into the future. I would have no hesitation recommending LP as a delivery partner to any firm who needs help untangling their data.”

PM at Global Market Data Provider

Catch the Multi-Cloud Wave

Charting Your Course

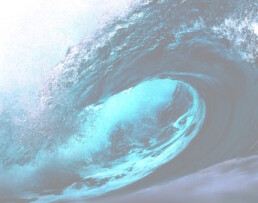

The digital realm is a constant current, pulling businesses towards new horizons. Today, one of the most significant tides shaping the landscape is the surge of multi-cloud adoption. But what exactly is driving this trend, and is your organisation prepared to ride the wave?

At its core, multi-cloud empowers businesses to break free from the constraints of a single cloud provider. Imagine cherry-picking the best services from different cloud vendors, like selecting the perfect teammates for a sailing crew. In 2022, 92% of firms either had or were considering a multi-cloud strategy (1). Having a strategy is one thing. Implementing it is a very different story. It takes meticulous planning and preparation. The potential of migrating from a single cloud provider to a multi-cloud environment can be huge if you are dealing with vast volumes of data. This flexibility unlocks a treasure trove of benefits.

1 Faction - The Continued Growth of Multi-Cloud and Hybrid Infrastructure

Top 4 Benefits

1 Unmatched Agility

Respond to ever-changing demands with ease by scaling resources up or down. Multi-cloud lets you ditch the "one-size-fits-all" approach and tailor your cloud strategy to your specific needs, fostering innovation and efficiency.

2 Resilience in the Face of the Storm

Don't let cloud downtime disrupt your operations. By distributing your workload across multiple providers, you create a safety net that ensures uninterrupted service even when one encounters an issue.

3 A World of Choice at Your Fingertips

No single cloud provider can be all things to all businesses. Multi-cloud empowers you to leverage the unique strengths of different vendors, giving you access to a diverse array of services and optimising your overall offering.

4 Future-Proofing Your Digital Journey

The tech landscape is a whirlwind of innovation. With multi-cloud, you're not tethered to a single provider's roadmap. Instead, you have the freedom to seamlessly adapt to emerging technologies and trends, ensuring you stay ahead of the curve.

Cost Meets the Cloud

Perhaps the most exciting development propelling multi-cloud adoption is the shrinking cost barrier. As cloud providers engage in fierce competition, prices are being driven down, making multi-cloud solutions more accessible for businesses of all sizes. This cost optimisation, coupled with the strategic advantages mentioned earlier, makes multi-cloud an increasingly attractive proposition.

However, a word of caution: while the overall trend is towards affordability, navigating the multi-cloud landscape still requires meticulous planning and cost management. Without proper controls and precise resource allocation, you risk increased expenses and potential setbacks. With increased distribution of data comes an increased risk of data leakage. Data must be protected not only within each cloud environment but also across the multi-cloud, and data monitoring increases in complexity. As data needs to move between cloud solutions, there may be additional latency risks. These can be mitigated with good risk controls and monitoring.

Kicking Off Your Journey

Ditch single-provider limitations and enjoy flexibility, resilience, and a wider range of services to boost your digital transformation but remember…

Multi-cloud environments can heighten security risks.

Navigate cautiously with proper controls and expert guidance to avoid hidden expenses.

Fierce competition is lowering multi-cloud barriers.

Let Leading Point be your guide, helping you set sail on the multi-cloud journey with confidence and unlock its full potential.

The multi-cloud path isn't without its challenges, but the rewards are undeniable. At Leading Point, we're experts in helping businesses navigate the multi-cloud wave with confidence. Let us help you unlock the full potential of multi-cloud for a more resilient, flexible, and innovative future. So, is your organisation ready to catch the wave? Contact Leading Point today and start your multi-cloud journey!

Unlocking the opportunity of vLEIs

Streamlining financial services workflows with Verifiable Legal Entity Identifiers (vLEIs)

Source: GLEIF

Trust is hard to come by

How do you trust people you have never met in businesses you have never dealt with before? It was difficult 20 years ago and even more so today. Many checks are needed to verify if the person you are talking to is the person you think it is. Do they even work for the business they claim to represent? Failures of these checks manifest themselves every day with spear phishing incidents hitting the headlines, where an unsuspecting clerk is badgered into making a payment to a criminal’s account by a person claiming to be a senior manager.

With businesses increasing their cross-border business and more remote working, it is getting harder and harder to trust what you see in front of you. How do financial services firms reduce the risk of cybercrime attacks? At a corporate level, there are Legal Entity Identifiers (LEIs) which have been a requirement for regulated financial services businesses to operate in capital markets, OTC derivatives, fund administration or debt issuance.

LEIs are issued by Local Operating Units (LOUs). These are bodies that are accredited by GLEIF (Global Legal Entity Identifier Foundation) to issue LEIs. Examples of LOUs are the London Stock Exchange Group (LSEG) and Bloomberg. However, LEIs only work at a legal entity level for an organisation. LEIs are not used for individuals within organisations.

Establishing trust at this individual level is critical to reducing risk and establishing digital trust is key to streamlining workflows in financial services, like onboarding, trade finance, and anti-financial crime.

This is where Verifiable Legal Entity Identifiers (vLEIs) come into the picture.

What is the new vLEI initiative and how will it be used?

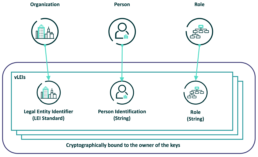

Put simply, vLEIs combine the organisation’s identity (the existing LEI), a person, and the role they play in the organisation into a cryptographically-signed package.

GLEIF has been working to create a fully digitised LEI service enabling instant and automated identity verification between counterparties across the globe. This drive for instant automation has been made possible by developments in blockchain technology, self-sovereign identity (SSI) and other decentralised key management platforms (Introducing the verifiable LEI (vLEI), GLEIF website).

vLEIs are secure, digitally signed credentials and a counterpart of the LEI, which is a unique 20-character alphanumeric ISO-standardised code used to represent a single legal organisation. The vLEI cryptographically binds three key elements (the LEI code, the person identification string, and the role string) to form a digital credential. The GLEIF database and repository provides key information on each registered legal entity, including its registered location, its legal entity name, and other key information pertaining to the registered entity or its subsidiaries. In GLEIF’s words, this is “principally ‘who is who’ and ‘who owns whom’” (GLEIF eBook: The vLEI: Introducing Digital I.D. for Legal Entities Everywhere, GLEIF Website).

In December 2022, GLEIF launched their first vLEI services through proof-of-concept (POC) trials, offering instant digitally verifiable credentials containing the LEI. This is to meet GLEIF’s goal to create “a standardised, digitised service capable of enabling instant, automated trust between legal entities and their authorised representatives, and the counterparty legal entities and representatives with which they interact” (GLEIF eBook: The vLEI: Introducing Digital I.D. for Legal Entities Everywhere, page 2).

“The vLEI has the potential to become one of the most valuable digital credentials in the world because it is the hallmark of authenticity for a legal entity of any kind. The digital credentials created by GLEIF and documented in the vLEI Ecosystem Governance Framework can serve as a chain of trust for anyone needing to verify the legal identity of an organisation or a person officially acting on that organisation’s behalf. Using the vLEI, organisations can rely upon a digital trust infrastructure that can benefit every country, company, and consumers worldwide”,

Karla McKenna, Managing Director GLEIF Americas

This new approach for the automated verification of registered entities will benefit many organisations and businesses. It will enhance and speed up regulatory reports and filings, due diligence, e-signatures, client onboarding/KYC, business registration, as well as other wider business scenarios.

Recall the spear phishing example in the introduction. A spoofed email will not carry a valid vLEI cryptographic signature, so it can be rejected (even automatically), potentially saving thousands of pounds.
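To make the idea of a signed identity package concrete, here is a minimal Python sketch of binding an organisation, a person, and a role together, and rejecting any request whose signature does not verify. It deliberately uses an HMAC with a shared secret from the Python standard library as a stand-in for the public-key signatures a real credential scheme would use. It is not the vLEI protocol, and the `RoleCredential` fields, the key, and the example values are all hypothetical.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class RoleCredential:
    lei: str      # organisation identifier (placeholder value in the example)
    person: str   # person identification string
    role: str     # role the person plays in the organisation


def sign(cred: RoleCredential, key: bytes) -> str:
    """Sign a canonical JSON form of the credential (HMAC is a stand-in here)."""
    payload = json.dumps(asdict(cred), sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(cred: RoleCredential, signature: str, key: bytes) -> bool:
    """Return True only if the signature matches the credential contents."""
    return hmac.compare_digest(sign(cred, key), signature)


# Hypothetical usage: a payment instruction is accepted only if the sender's
# credential verifies; a spoofed sender cannot produce a valid signature.
issuer_key = b"demo-only-secret"
genuine = RoleCredential(lei="PLACEHOLDER-LEI", person="Jane Doe", role="Chief Financial Officer")
signature = sign(genuine, issuer_key)

spoofed = RoleCredential(lei="PLACEHOLDER-LEI", person="Jane Doe", role="Chief Executive Officer")
accepted = verify(genuine, signature, issuer_key)   # credential is intact
rejected = not verify(spoofed, signature, issuer_key)  # role was altered
```

The design point is that the organisation, person, and role are signed together, so changing any one of them invalidates the whole package.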

How do I get a vLEI?

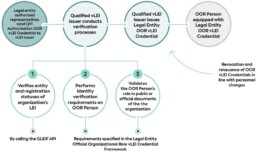

Registered financial entities can obtain a vLEI from a Qualified vLEI Issuer (QVI) organisation to benefit from instant verification, when dealing with other industries or businesses (Get a vLEI: List of Qualified vLEI Issuing Organisations, GLEIF Website).

A QVI organisation is authorised by GLEIF to register, renew or revoke vLEI credentials belonging to any financial entity. GLEIF offers a Qualification Program where organisations can apply to operate as a QVI. GLEIF maintains a list of QVIs on its website.

Source: GLEIF

What is the new ISO 5009:2022 and why is it relevant?

The International Organization for Standardization (ISO) published the ISO 5009 standard, which was initially proposed by GLEIF, in 2022 for the financial services sector. This is a new scheme to address “the official organisation roles in a structured way in order to specify the roles of persons acting officially on behalf of an organisation or legal entity” (ISO 5009:2022, ISO.org).

ISO and GLEIF developed this new scheme of combining organisation roles with the LEI to enable digital identity management of credentials. The ISO 5009 scheme offers a standard way to specify organisational roles in two types of LEI-based digital assets: public key certificates with embedded LEIs, as per X.509 (ISO/IEC 9594-8) and as outlined in ISO 17442-2, and digital verifiable credentials such as vLEIs. In both cases, the aim is to help confirm the authenticity of the role of a person who acts on behalf of an organisation (ISO 5009:2022, ISO Website). This will help speed up the validation of person(s) acting on behalf of an organisation, for regulatory requirements and reporting, as well as for ID verification, across various business use cases.

Leading Point have been supporting GLEIF in the analysis and implementation of the new ISO 5009 standard, for which GLEIF acts as the operating entity, maintaining the standard on behalf of ISO. Identifying and defining the official organisational roles (OORs) depended on Leading Point’s accurate assessment of hundreds of legal documents.

“We have seen first-hand the challenges of establishing identity in financial services and were proud to be asked to contribute to establishing a new standard aimed at solving this common problem. As data specialists, we continuously advocate the benefits of adopting standards. Fragmentation and trying to solve the same problem multiple times in different ways in the same organisation hurts the bottom line. Fundamentally, implementing vLEIs using ISO 5009 roles improves the customer experience, with quicker onboarding, reduced fraud risk, faster approvals, and most importantly, a higher level of trust in the business.”

Rajen Madan (Founder and CEO, Leading Point)

Thushan Kumaraswamy (Founding Partner & CTO, Leading Point)

How can Leading Point assist?

Our team of expert practitioners can assist financial entities to implement the ISO 5009 standard in their workflows for trade finance, anti-financial crime, KYC and regulatory reporting. We are fully equipped to help any organisation that is looking to get vLEIs for their senior team and to incorporate vLEIs into their business processes, reducing costs, accelerating new business growth, and preventing financial crime.

Glossary of Terms and Additional Information on GLEIF

Who is GLEIF?

The Global Legal Entity Identifier Foundation (GLEIF) was established by the Financial Stability Board (FSB) in June 2014, as part of the G20 agenda to endorse a global LEI. GLEIF helps to implement the use of the Legal Entity Identifier (LEI) and is headquartered in Basel, Switzerland.

What is an LEI?

A Legal Entity Identifier (LEI) is a unique 20-character alphanumeric code based on the ISO 17442 standard. It identifies legal entities that are involved in financial transactions. The reference data associated with an LEI principally answers ‘who is who’ and ‘who owns whom’, as per ISO and GLEIF standards, for entity verification purposes and to improve data quality in financial regulatory reports.
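As a small illustration of what the 20-character format allows, the sketch below checks that a string is well formed and that its last two characters are valid check digits. It assumes the check digits follow the ISO 7064 MOD 97-10 scheme, in which letters are mapped to numbers (A=10 to Z=35) and the resulting number must leave a remainder of 1 when divided by 97. The function names are hypothetical, and the 18-character base used in the example is made up rather than a registered LEI.

```python
def _to_digits(text: str) -> str:
    """Map 0-9 to themselves and A-Z to 10-35, then join into one digit string."""
    return "".join(str(int(ch, 36)) for ch in text)


def lei_check_digits(base18: str) -> str:
    """Compute the two check digits for an 18-character base (MOD 97-10)."""
    remainder = int(_to_digits(base18.upper() + "00")) % 97
    return f"{98 - remainder:02d}"


def is_valid_lei(lei: str) -> bool:
    """Check length, character set, and check digits of a candidate LEI."""
    lei = lei.strip().upper()
    if len(lei) != 20 or not (lei.isascii() and lei.isalnum()):
        return False
    if not lei[18:].isdigit():
        return False
    return int(_to_digits(lei)) % 97 == 1


# Hypothetical usage with a made-up base (not a real, registered LEI).
example_base = "ABCD00EXAMPLE00123"
example_lei = example_base + lei_check_digits(example_base)
```

A format check like this only catches typing and transcription errors; confirming that an LEI is actually registered and current still requires a lookup against the Global LEI Index.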

How does GLEIF help?

GLEIF not only helps to implement the use of the LEI, but also offers global reference data and a central repository of LEI information via the Global LEI Index on gleif.org. This is an online, public, open, standardised, high-quality searchable tool for LEIs, which includes both historical and current LEI records.

What is GLEIF’s vision?

GLEIF believes that each business involved in financial transactions should be identifiable with a single, unique, global digital identifier. GLEIF looks to increase the rate of LEI adoption globally so that the Global LEI Index can include all global financial entities that engage in financial trading activities. GLEIF believes this will encourage market participants to reduce operational costs and burdens and will offer better insight into the global financial markets (Our Vision: One Global Identity Behind Every Business, GLEIF Website).

Séverine Raymond Soulier's Interview with Leading Point

Séverine Raymond Soulier is the recently appointed Head of EMEA at Symphony.com, the secure, cloud-based communication and content sharing platform. Séverine has over a decade of experience within the Investment Banking sector and spent 9 years with Thomson Reuters (now Refinitiv), where she headed the Investment and Advisory division for EMEA, leading a team of senior market development managers in charge of Investing and Advisory revenue across the region. Séverine brings a wealth of experience and expertise to Leading Point, helping expand its product portfolio and its reach across international markets.

John Macpherson’s Interview with Leading Point 2022

John Macpherson is the former CEO of BMLL Technologies and a veteran of the City, having held several MD roles at Citi, Nomura and Goldman Sachs. In recent years John has used his extensive expertise to advise start-ups and FinTechs on challenges ranging from compliance to business growth strategy. John is Deputy Chair of the Investment Association Engine, which is the trade body and industry voice for more than 200 UK investment managers and insurance companies.

Leading Point and P9 Form Collaboration to Accelerate Trade and Transaction Reporting

Leading Point and Point Nine (P9) will collaborate to streamline and accelerate the delivery of trade and transaction reporting, combining P9’s scalable regulatory solution with Leading Point's data management expertise. This new collaboration will help both firms better serve their clients and provide faster, more efficient reporting.

London, UK, July 22nd, 2022

P9’s in-house proprietary technology is a scalable regulatory solution. It provides best-in-class reporting solutions to both buy- and sell-side financial firms, service providers, and corporations, such as ED&F Man, FxPro and Schnigge. P9 helps them ensure high-quality and accurate trade/transaction reporting, and to remain compliant under the following regimes: EMIR, MiFIR, SFTR, FinfraG, ASIC, CFTC and Canadian.

Leading Point, a highly regarded digital transformation company headquartered in London, are specialists in intelligent data solutions. They serve a global client base of capital market institutions, market data providers and technology vendors.

Leading Point are data specialists, who have helped some of the Financial Services industry’s biggest players organise and link their data, as well as design and deliver data-led transformations in global front-to-back trading. Leading Point are experts in getting into the detail of what data is critical to businesses. They deliver automation and re-engineered processes at scale, leveraging their significant financial services domain expertise.

The collaboration will combine the power of P9's knowledge of regulatory reporting, and Leading Point’s expertise in data management and data optimisation. The integration of Leading Point’s services and P9's regulatory technology will enable clients to seamlessly integrate improved regulatory reporting and efficient business processes.

Leading Point will organise and optimise P9’s clients’ data sets, making it feasible for P9's regulatory software to integrate with client regulatory workflows and reporting. Christina Barbash, Business Development Manager at Point Nine, said that “creating a network of best-in-breed partners will enable Point Nine to better serve its existing and potential clients in the trade and transaction reporting market.”

Andreas Roussos, Partner at Point Nine adds:

“Partnering with Leading Point is a pivotal strategic move for our organization. Engaging with consulting firms will not only give us a unique position in the market, but also allow us to provide more comprehensive service to our clients, making it a game-changer for our organization, our clients, and the industry as a whole.”

Dishang Patel, COO and Founding Partner at Leading Point, speaks on the collaboration:

“We are thrilled to announce that we are collaborating with Point Nine. Their technology and knowledge of regulatory reporting can assist the wider European market. The new collaboration will unlock doors to entirely new transformation possibilities for organisations within the Financial Sector across EMEA.”

The collaboration reflects the growing complexity of financial trading and businesses’ need for more automation for compliance with regulations, whilst ensuring data management is front and centre of the approach for optimum client success. With this in mind, the two firms have committed to supporting organisations in improving the quality and accuracy of their regulatory reporting for all regimes.

About Leading Point

Leading Point is a digital transformation company with offices in London and Dubai. They are revolutionising the way change is done through their blend of expert services and their proprietary technology, modellr™.

Find out more at: www.leadingpoint.io

Contact Dishang Patel, Founding Partner & COO at Leading Point - dishang@leadingpoint.io

About Point Nine

Point Nine (Limassol, Cyprus), is a dedicated regulatory reporting firm, focusing on the provision of trade and transaction reporting services to legal entities across the globe. Point Nine uses its in-house cutting-edge proprietary technology to provide a best-in-class solution to all customers and regulatory reporting requirements.

Find out more at: www.p9dt.com

Contact Head office, Point Nine Data Trust Limited - info@p9dt.com

ESG Operating models hold the key to ESG compliance

John Macpherson on ESG Risk

In my last article, I wrote about the need for an effective operating model in the handling and optimisation of data for Financial Services firms. But data is only one of several key trends amongst these firms that would benefit from a digital operating model. ESG has risen through the ranks in importance, and reporting on it has become imperative.

The Investment Association Engine Program, which I Chair, is designed to identify the most relevant pain points and key themes amongst Asset and Investment Management clients. We do this by searching out FinTech businesses that are already working on solutions to these issues. By partnering with these businesses, we can help our clients overcome their challenges and improve their operations.

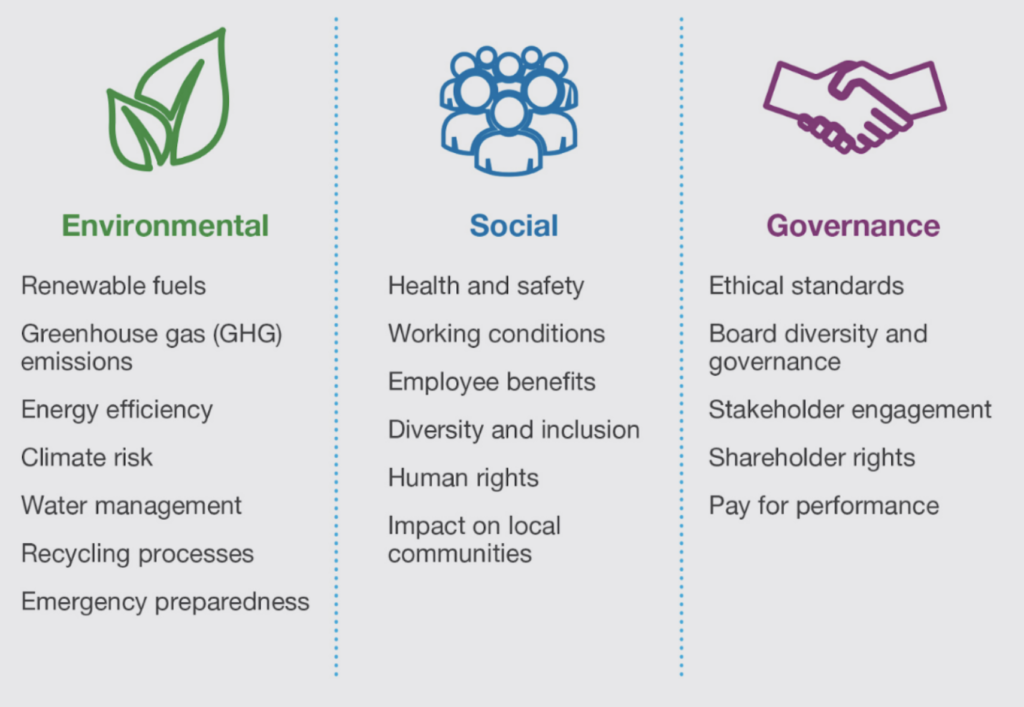

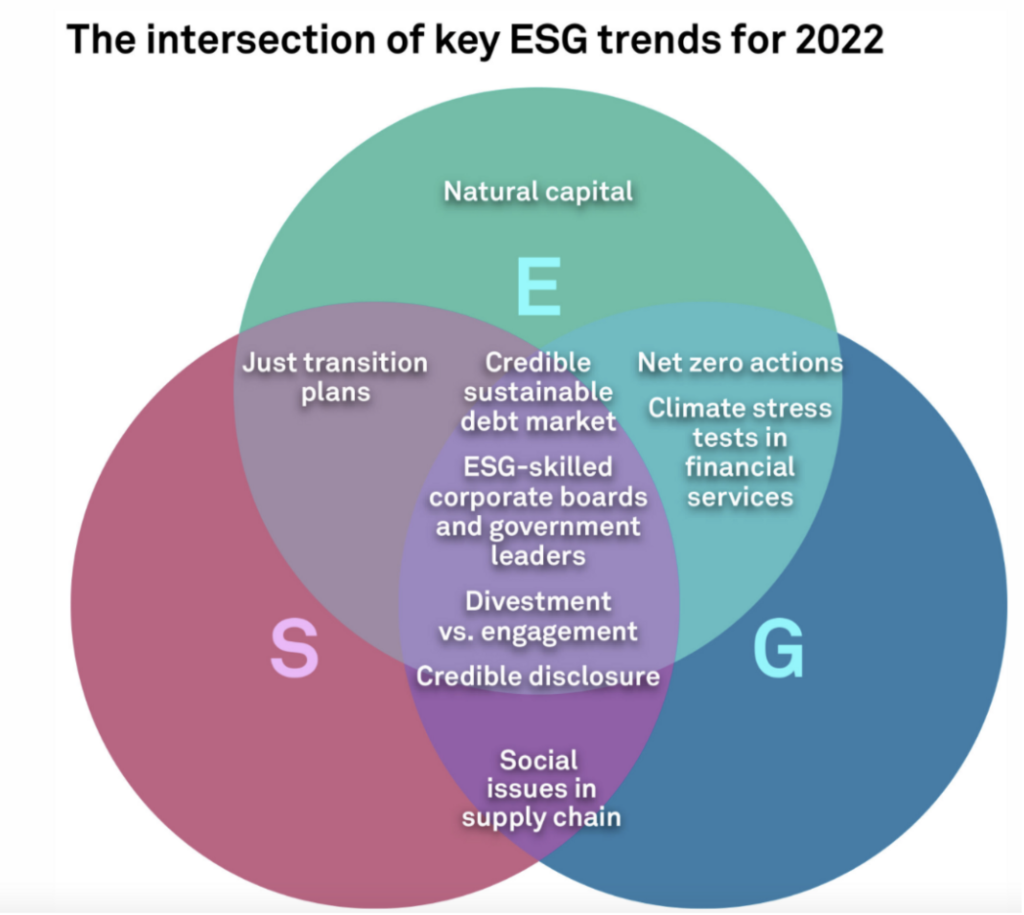

While data has been an ever-present issue, ESG has risen to an equal standing of importance over the last couple of years. Different regulatory jurisdictions and expectations worldwide have left SME firms struggling to comply with, and implement, a new paradigm of environmental, social and governance protocols.

ESG risk is different to anything we have experienced before and does not fit into neat categories such as operational risk. The depth and breadth of data and models required for firms to make informed strategic decisions varies widely based on the specific issue at hand (e.g., supply chain, reputation, climate change goals, etc.). Firms need to carefully consider their own position and objectives when determining how much analysis is needed.

According to S&P Global, sustainable debt issuance reached a record level in 2021, and is only expected to increase further in the coming years. With this growth comes increased scrutiny and a heightened concern of so-called ‘greenwashing’, where companies falsely claim to be environmentally friendly. Participants need to manage that growth in a way that addresses these concerns.

Investors, regulators and the public, in general, are keen to challenge large companies’ ESG goals and results. These challenges vary wildly, but the biggest seen on a regular basis range from human rights to social unrest and climate change. As organisations begin to decarbonise their operations, they face the initially overlooked challenge of creating a credible near-term plan that will enable them to reach their long-term sustainability goals.

Investor pressure on climate change has historically focussed on the Energy sector. Now central banks are trying to incorporate climate risk as a stress testing feature for all Financial Services firms.

Source: S&P Global

Operating models hold the key to ESG transition and compliance. Having an operating model for how each of the firm’s functions intersects with ESG requires new processes, new data, and new reporting techniques. This needs to be pulled across the enterprise, so firms have a process that is substantiated.

Before firms worry about ESG scores from their market data providers, they would do well to look closely at their own operating model and framework. In this way, they can then pull in the data required from the marketplace and use it in anger.

Leading Point is a FinTech business I am proud to be supporting. Their operating model system, modellr™ describes how financial services businesses work, from the products and services offered, to the key processes, people, data, and technology used to deliver value to their customers. This digital representation of how the business works is crucial to show what areas ESG will impact and how the firm can adapt in the most effective way.

Rajen Madan, CEO at Leading Point:

“In many ways, the transition to ESG is exposing the acute gap in firms of not being able to have meaningful dialogue with the plethora of data they already have, and need, to further add to for ESG”.

modellr™ harvests a company’s existing data to create a living dashboard, whilst also digitising the change process and enabling quicker and smarter decision-making. Access to all the information, from internal and external sources, in real time is proving transformative for SME-sized businesses.

Thushan Kumaraswamy, Chief Solutions Officer at Leading Point:

“ESG is already one of the biggest drivers of transformation in financial services and is only going to get bigger. Firms need to identify the impact on their business, choose the right change option, execute the strategy, and measure the improvements. The mass of ESG frameworks adds to the confusion of what to report and how. Tools such as modellr™ bring clarity and purpose to the ESG imperative.”

While most firms will look to sustainability officers for guidance on matters around ESG, Leading Point are providing these officers, and less qualified team members, with the tools to make informed decisions now, and in the future. We have established exactly what these firms need to succeed – a digital operating model.

Words by John Macpherson — Board advisor at Leading Point and Chair of the Investment Association Engine

The Challenges of Data Management

John Macpherson on The Challenges of Data Management

I often get asked, what are the biggest trends impacting the Financial Services industry? Through my position as Chair of the Investment Association Engine, I have unprecedented access to the key decision-makers in the industry, as well as constant connectivity with the ever-expanding Fintech ecosystem, which has helped me stay at the cutting edge of the latest trends.

So, when I get asked, ‘what is the biggest trend that financial services will face’, for the past few years my answer has remained the same: data.

During my time as CEO of BMLL, big data rose to prominence and developed into a multi-billion-dollar problem across financial services. I remember well an early morning interview I gave to CNBC around 5 years ago, where the facts were starkly presented. Back then, data was doubling every three years globally, but at an even faster pace in financial markets.

Firms are struggling under the weight of this data

The use of data is fundamental to a company's operations, but firms are finding it difficult to get a handle on it. The pace of this increase has left many smaller and mid-sized IM/AM firms in a quandary. Their ability to access, manage and use multiple data sources alongside their own data, market data, and any alternative data sources, is sub-optimal at best. Most core data systems are not architected to address the volume and pace of change required, with manual reviews and inputs creating unnecessary bottlenecks. These issues, among a host of others, mean risk management systems cannot cope. Modernised core data systems are imperative to recover the real-time insights that are currently lost to fragmented and slow-moving information.

Around half of all financial services data goes unmentioned and ungoverned. This “dark data” poses a security and regulatory risk, as well as a huge opportunity.

While data analytics, big data, AI, and data science are historically the key sub-trends, these have been joined by data fabric (as an industry standard), analytical ops, data democratisation, and a shift from big data to smaller and wider data.

Operating models hold the key to data management

Governance is paramount to using this data in an effective, timely, accurate and meaningful way. Operating models are the true gauge as to whether you are succeeding.

Much can be achieved with the relatively modest budget and resources firms have, provided they invest in the best operating models around their data.

Leading Point is a firm I have been getting to know over several years now. Their data intelligence platform, modellr™, is the first truly digital operating model. modellr™ harvests a company’s existing data to create a living operating model, digitising the change process and enabling quicker, smarter decision-making. By digitising the process, they’re removing the historically slow and laborious consultative approach. Access to all the information in real time is proving transformative for smaller and medium-sized businesses.

True transparency around your data, understanding it and its consumption, and then enabling data products to support internal and external use cases, is very much available.

Different firms are at very different places on their maturity curve. Longer-term investment in data architecture, be it data fabric or data mesh, will provide the technical backbone to harvest ML/AI and analytics.

Taking control of your data

Recently I was talking to a large investment bank for whom Leading Point had been brought in to help. The bank was looking to transform its client data management and associated regulatory processes such as KYC and anti-financial crime.

They were investing heavily in sourcing, validating, normalising, remediating, and distributing over 2,000 data attributes. This was costing the bank a huge amount of time, money, and resources. But, despite the changes, their environment and change processes had become too complicated to have any chance of success. The results were haphazard, with poor controls and no understanding of what was missing.

Leading Point was brought in to help and decided on a data minimisation approach. They profiled and analysed the data, despite working across regions and divisions. Quickly, 2,000 data attributes were narrowed to fewer than 200 critical ones for the consuming functions. This allowed the financial institution's regulatory and reporting processes to come to life, with clear data quality measurement and ownership processes. It also allowed the institution to significantly reduce the complexity of its data and improve its usability, meaning that multiple business owners were able to produce rapid and tangible results.
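To make the idea of data minimisation concrete, here is a minimal sketch in Python. It is an illustration only, not a description of Leading Point's actual method: the attribute names, the `AttributeProfile` fields, and the thresholds are all hypothetical. It assumes each attribute has been profiled for which consuming functions read it and how often it is populated, and keeps only those that are both used and reasonably well filled.

```python
from dataclasses import dataclass, field


@dataclass
class AttributeProfile:
    """Profile of one data attribute (all fields are hypothetical)."""
    name: str
    consumers: set[str] = field(default_factory=set)  # functions that read it
    fill_rate: float = 0.0  # share of records where the attribute is populated


def critical_attributes(profiles, min_consumers=1, min_fill_rate=0.5):
    """Return the names of attributes that are both used and well populated."""
    return sorted(
        p.name
        for p in profiles
        if len(p.consumers) >= min_consumers and p.fill_rate >= min_fill_rate
    )


# Illustrative data only; a real profile would cover thousands of attributes.
profiles = [
    AttributeProfile("legal_entity_name", {"KYC", "regulatory_reporting"}, 0.99),
    AttributeProfile("country_of_incorporation", {"KYC"}, 0.95),
    AttributeProfile("fax_number", set(), 0.10),
    AttributeProfile("legacy_segment_code", {"KYC"}, 0.20),
]

shortlist = critical_attributes(profiles)
print(shortlist)
print(f"kept {len(shortlist)} of {len(profiles)} attributes")
```

In practice the thresholds would be agreed with the consuming functions rather than fixed in code, and attributes that fall out of the shortlist would be reviewed before being retired.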

I was speaking to Rajen Madan, the CEO of Leading Point, and we agreed that in a world of ever-growing data, data minimisation is often key to maximising success with data!

Elsewhere, Leading Point has seen benefits unlocked from unifying data models and working on ontologies, standards, and taxonomies. Their platform, modellr™, is enabling many firms to link their data, define common aggregations, and support knowledge graph initiatives, allowing firms to deliver more timely, accurate and complete reporting, as well as insights on their business processes.

The need for agile, scalable, secure, and resilient tech infrastructure is more pressing than ever. Firms’ own legacy ways of handling this data are the single biggest barrier to their growth and technological innovation.

If you see a digital operating model as anything other than a must-have, then you are missing out. It’s time for a serious re-think.

Words by John Macpherson — Board advisor at Leading Point, Chair of the Investment Association Engine

John was recently interviewed about his role at Leading Point, and the key trends he sees affecting the financial services industry. Watch his interview here

Leading Point Shortlisted For Data Management Insight Awards

Leading Point has been shortlisted for the A-Team Data Management Insight Awards.

The Data Management Insight Awards, now in their seventh year, are designed to recognise leading providers of data management solutions, services and consultancy within capital markets.

Leading Point has been nominated in four categories:

- Most Innovative Data Management Provider

- Best Data Analytics Solution Provider

- Best Proposition for AI, Machine Learning, Data Science

- Best Consultancy in Data Management

Areas of Outstanding Service & Innovation

Leading Form Index: Data readiness assessment, created by Leading Point FM, which measures firms’ data capabilities and their capacity to transform across 24 unique areas. This allows participating firms to understand the maturity of their information assets and the potential to apply new tech (AI, DLT), and to benchmark against peers.

Chief Risk Officer Dashboard: Management Information Dashboard that specifies, quantifies, and visualises risks arising from firms’ non-financial, operational, fraud, financial crime, and cyber risks.

Leading Point FM ‘Think Fast’ Application: The application provides the ability to input use cases and solution journeys and helps visualise process, systems and data flows, as well as target state definition and KPIs. This allows business change and technology teams to quickly define and initiate change management.

Anti-Financial Crime Solution: Data-centric approach combined with Artificial Intelligence technology that reimagines and optimises AML processes to reduce volumes of client due diligence, reduce overall risk exposure, and provide the roadmap to AI-assisted automation.

Treasury Optimisation Solution: Data content expertise leveraging cutting-edge DLT and Smart Contract technology to bridge intracompany data silos and enable global corporates to access liquidity and efficiently manage finance operations.

Digital Repapering Solution: Data-centric approach to sourcing, management and distribution of unstructured data, combined with NLP technology, to provide a roadmap towards AI-assisted repapering and automated contract storage and distribution.

Leading Form Practical Business Design Canvas: A practical business design method to describe your business goals and objectives, change projects, capabilities, operating model, and KPIs to enable a true business-on-a-page view that is captured within hours.

ISO 27001 Certification – Delivery of Information Security Management System (ISMS) & Cyber risk mitigation with a Risk Analysis Tool

What COP26 means for Financial Services

Many have proclaimed COP26 a failure, with funding falling short, loose wording and non-binding commitments. However, despite the doom and gloom, there was a bright spot: the UK’s finance industry.

Trillions need to be invested to achieve the 1.5-degree target, but governments alone do not have the funds to achieve this. Alternative sources of finance must be found, and private investment needs to be encouraged on all fronts to ‘go green’. Looking at supply-side energy alone, the IPCC estimates that up to $3.8 trillion needs to be mobilised annually to achieve the transition to net-zero by 2050.

The UK led from the front in green finance, introducing plans to become the world’s first net-zero aligned financial centre. New Treasury rules for financial institutions listed on the London Stock Exchange mean that companies will have to create and publish net-zero transition plans by 2023, although the full details are yet to be announced. These plans will be evaluated by a new institution but, crucially, are not mandatory. The adjudicator of the investment plans will be investors. Although some argue the regulation could be stronger, just like national climate targets, once institutions are publishing their alignment with net-zero there is a level of accountability that can be scrutinised and a platform for comparison that encourages competition. Anything stronger could have pushed investment firms into less-regulated exchanges.

Encouragingly, the private sector showed strong engagement, with nearly 500 global financial services firms agreeing to align $130 trillion — around 40% of the world’s financial assets — with the goals set out in the Paris Agreement, including limiting global warming to 1.5 degrees Celsius.

From large multinational companies to small local businesses, the summit provided greater clarity on how climate policies and regulations will shape the future business environment. The progress made on phasing out fossil fuel subsidies and coal investments was a clear signal to the global market about the future viability of fossil fuels. It will now be more difficult to gain funding to expand existing coal mines or build new ones. Over time, this adjustment will have wider impacts on the funding of other polluting industries.

This new framework will give the private sector the confidence and certainty it needs to invest in green technology and green energy. Renewable energy is already the cheapest form of energy in two-thirds of the world. This reassurance will be crucial in driving the economies of scale we need within the renewable energy industry.

A truly sustainable future is still a long way off. The private sector will still invest in fossil fuels, new regulations will cause challenges, and ESG remains optional; but initial signals from COP26 show that the future of the world is looking green.

By Maria King — ESG Associate at Leading Point

Who we are:

Leading Point is a fintech specialising in digital operating models. We are revolutionising the way operating models are created and managed through our proprietary technology, modellr™, and expert services delivered by our team of specialists.

GDFM & Leading Point Partnering for Smarter Regulatory Health Management

GDFM and Leading Point are collaborating to deliver innovative and efficient regulatory risk management to our clients through the SMART_Dash product. SMART_Dash provides consistent, centralised, accessible regulatory health data, giving responsible and accountable individuals the transparency they need for risk mitigation decisions and actions. This is complemented by a SMART_Board suite for Board-level leadership and a more detailed SMART_Support suite for regulatory reporting teams.

We are delighted that SMART_Dash has been shortlisted in 3 categories in this year's prestigious RegTech Insight Awards in Europe, which recognises both established solution providers and innovative newcomers, seeking to herald and highlight innovative RegTech solutions across the global financial services industry.

GD Financial Markets Head of Regulatory Compliance Practice and SMART_Dash Co-creator Sarah Peaston: “Centralised, consolidated, consistent regulatory health transparency and tracking is key to identifying and managing regulatory and operating risk. I am delighted that SMART_Dash has been recognised as a new breed of solution that practically assists Managers, Senior Managers and Leadership with managing their regulatory health through the provision of the right information, at the right level to the right seniority.”

Leading Point CEO Rajen Madan: “Our vision with SMART_Dash is to accelerate better regulatory risk management approaches and vastly more efficient RegOps. As financial services practitioners we are acutely aware of the time managers spend trying to make sense of their regulatory and operating risk areas from a multitude of inconsistent reports. SMART_Dash enables the shift to an enhanced way of risk management, which creates standardisation and makes reg data work for your business. We are very grateful to the COOs, CROs and CFOs who have contributed to its development and helped the industry move forward.”

GDFM and Leading Point are rolling out the SMART_Dash suite to the first set of industry consortium partners progressively in H1 2021, and will thereafter open it to a wider set of institutions.

The Composable Enterprise: Improving the Front-Office User Experience

[et_pb_section fb_built="1" _builder_version="4.4.8" min_height="1084px" custom_margin="16px||-12px|||" custom_padding="0px||0px|||"][et_pb_row column_structure="1_2,1_2" _builder_version="4.4.8"][et_pb_column type="1_2" _builder_version="4.4.8"][et_pb_text _builder_version="4.4.8" text_font="||||||||" text_font_size="14px" text_line_height="1.6em" header_font="||||||||" header_font_size="25px" width="100%" custom_margin="10px|-34px|-5px|||" custom_padding="16px|0px|5px|8px||" content__hover_enabled="off|desktop"]

By Dishang Patel, Fintech & Growth Delivery Partner, Leading Point Financial Markets.